1. Background

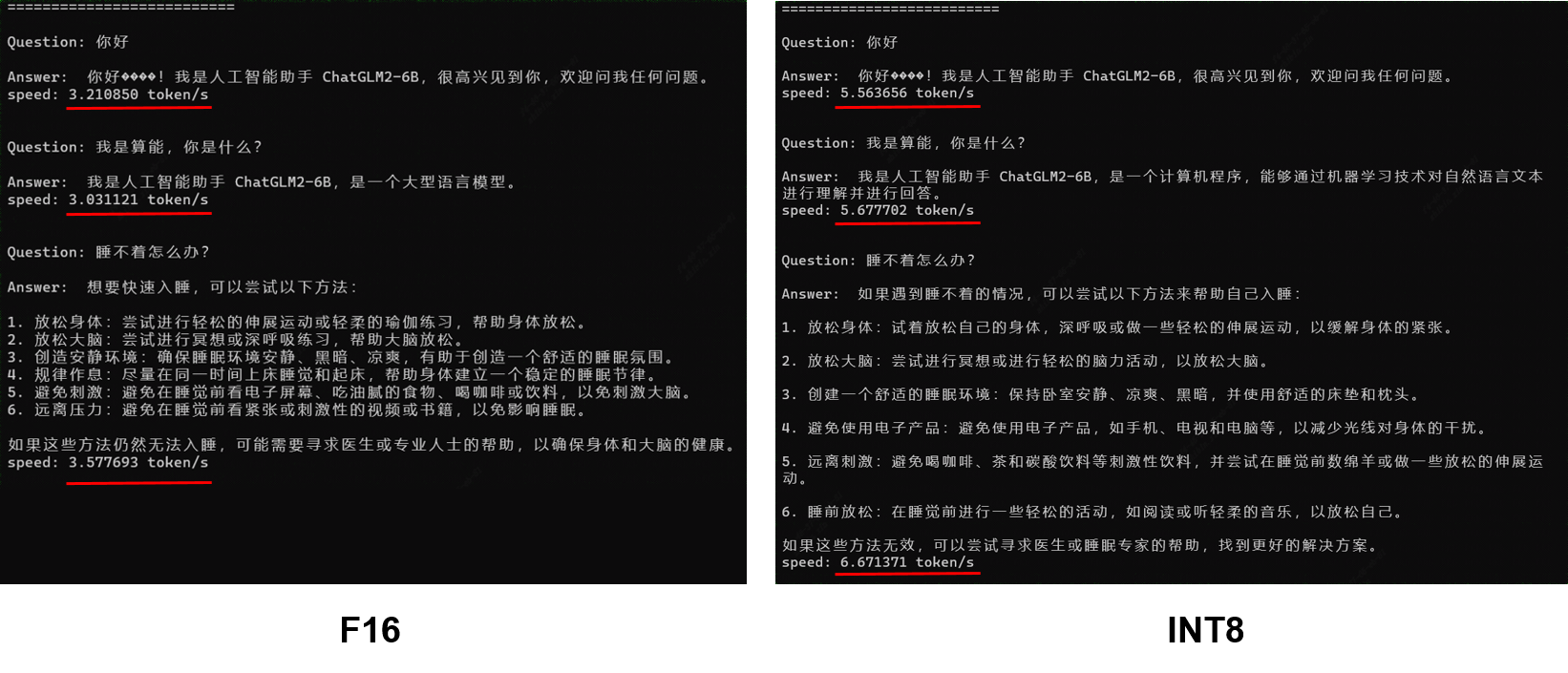

In July 2023, we deployed ChatGLM2-6B on the BM1684X processor using a static-graph approach, in F16 precision with a model size of 12GB; the average speed was 3 tokens/s (see ChatGLM2-6B Workflow Analysis and TPU-MLIR Deployment). To further improve the model's inference efficiency and reduce its storage footprint, we then deployed the model with INT8 quantization.

2. Quantization Approach

The existing INT8 quantization approaches in TPU-MLIR are not suitable for direct application to LLMs. The main reason is the high time and computational cost of both PTQ (post-training quantization) and QAT (quantization-aware training): a single round of PTQ calibration on an LLM can take a few days. In addition, the errors introduced by quantization cannot be effectively mitigated in an LLM, which ultimately results in a significant loss of accuracy.

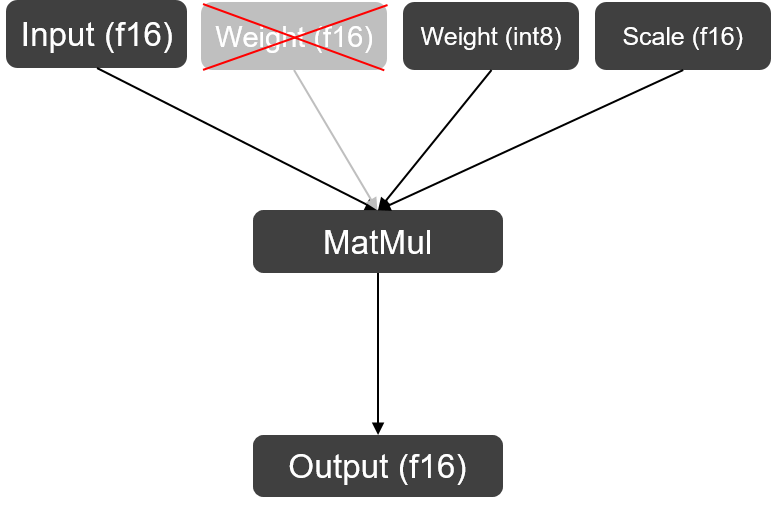

For the quantization approach, we adopted the W8A16 strategy used in the official ChatGLM2 repository. This strategy quantizes only the weights of the Linear layers within the GLMBlocks, at per-channel granularity; activations stay in F16. During computation, the weights are dequantized back to F16 before the matrix multiplication with the input. Because the weight values within the Linear layers of an LLM vary over a very small range, they are friendly to INT8 quantization, and the cosine similarity between quantized and unquantized results can exceed 99%.

Figure: W8A16 MatMul
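The per-channel weight quantization and dequantization described above can be sketched in plain Python. This is a minimal illustration, not the ChatGLM2 or TPU-MLIR code: it assumes symmetric quantization (scale = max|w| / 127, no zero point) and treats each row of the weight array as one channel. Which axis the real implementation uses and how it rounds are assumptions here, and `quantize_per_channel`, `dequantize` and `cosine_similarity` are hypothetical helper names.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def quantize_per_channel(w: Matrix) -> Tuple[List[List[int]], List[float]]:
    """Symmetric INT8 quantization with one scale per row (assumed channel axis)."""
    q: List[List[int]] = []
    scales: List[float] = []
    for row in w:
        max_abs = max(abs(x) for x in row)
        scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid division by zero
        # Round to the nearest integer and clamp to the symmetric INT8 range.
        q.append([max(-127, min(127, round(x / scale))) for x in row])
        scales.append(scale)
    return q, scales


def dequantize(q: List[List[int]], scales: List[float]) -> Matrix:
    """w_hat = q * scale per row (Python floats stand in for F16 here)."""
    return [[v * s for v in row] for row, s in zip(q, scales)]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical weights: small, narrowly distributed values.
    w = [[rng.gauss(0.0, 0.02) for _ in range(64)] for _ in range(32)]
    q, scales = quantize_per_channel(w)
    w_hat = dequantize(q, scales)
    flat = [x for row in w for x in row]
    flat_hat = [x for row in w_hat for x in row]
    print(f"cosine similarity: {cosine_similarity(flat, flat_hat):.6f}")
```

Storing one INT8 value per weight plus one scale per channel is what halves the weight storage, while the dequantized matrix stays close enough to the original for the F16 MatMul that follows.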

3. TPU-MLIR Implementation

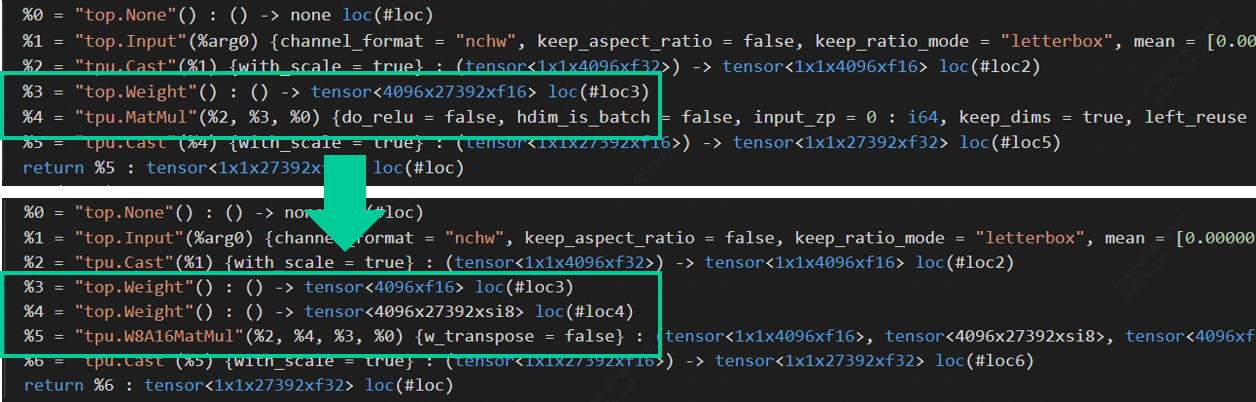

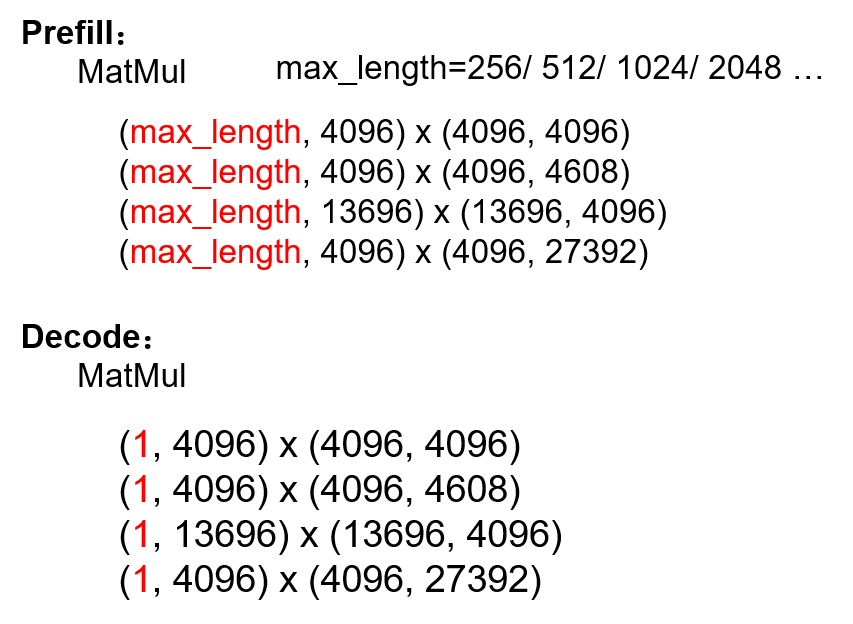

During lowering from the Top layer to the Tpu layer, the compiler automatically searches the model for MatMul operations whose right input is a 2-D weight and replaces them with W8A16MatMul operators. This is mainly done to distinguish them from MatMul operators whose left and right inputs are both activations (mm, bmm, and linear layers are all unified as MatMul operators within the compiler). Take one of the MatMul operators in ChatGLM2 as an example: $L$ has shape $(\text{max\_length} \times 4096)$ and $R$ has shape $(4096 \times 27392)$, both in F16. After quantization, the weight shrinks from 214MB to 107MB, and the additional F16 $Scale$ of shape $(4096)$ occupies only 0.008MB, a size reduction of nearly 50%. The source code for both the operator replacement and the weight quantization can be found in the TPU-MLIR repository.

Figure: Op Replacement in TPU-MLIR
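A toy version of the replacement rule and of the storage arithmetic is sketched below. It is only an illustration: the real pass lives in TPU-MLIR's Top-to-Tpu lowering, whereas the `Tensor` and `Op` classes, the `is_weight` flag, `replace_weight_matmuls` and `size_mib` here are hypothetical stand-ins, and the attention shapes are illustrative. The size calculation reproduces the figures above, assuming 1 MB = 2^20 bytes.

```python
from dataclasses import dataclass
from typing import List, Tuple

MIB = 1024 * 1024  # the sizes quoted above match 2**20-byte megabytes


@dataclass
class Tensor:
    name: str
    shape: Tuple[int, ...]
    is_weight: bool  # True for constant weights, False for activations


@dataclass
class Op:
    kind: str
    left: Tensor
    right: Tensor


def replace_weight_matmuls(ops: List[Op]) -> List[Op]:
    """Rewrite MatMuls whose right input is a 2-D weight into W8A16MatMul.

    MatMuls between two activations (e.g. attention bmm) are left untouched.
    """
    rewritten = []
    for op in ops:
        if op.kind == "MatMul" and op.right.is_weight and len(op.right.shape) == 2:
            rewritten.append(Op("W8A16MatMul", op.left, op.right))
        else:
            rewritten.append(op)
    return rewritten


def size_mib(shape: Tuple[int, ...], bytes_per_elem: int) -> float:
    count = 1
    for dim in shape:
        count *= dim
    return count * bytes_per_elem / MIB


if __name__ == "__main__":
    max_length = 512  # one of the example lengths
    x = Tensor("hidden", (max_length, 4096), is_weight=False)
    w = Tensor("linear_weight", (4096, 27392), is_weight=True)
    # Illustrative activation-activation MatMul (attention scores).
    q = Tensor("query", (32, max_length, 128), is_weight=False)
    k_t = Tensor("key_t", (32, 128, max_length), is_weight=False)
    ops = replace_weight_matmuls([Op("MatMul", x, w), Op("MatMul", q, k_t)])
    print([op.kind for op in ops])  # ['W8A16MatMul', 'MatMul']
    print(size_mib(w.shape, 2))     # F16 weight: 214.0
    print(size_mib(w.shape, 1))     # INT8 weight: 107.0
    print(size_mib((4096,), 2))     # F16 scale: 0.0078125
```

Keying the rule on "right input is a 2-D constant" is what separates weight MatMuls from activation-activation MatMuls, since both reach the compiler as the same MatMul op.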

4. Principles of Acceleration

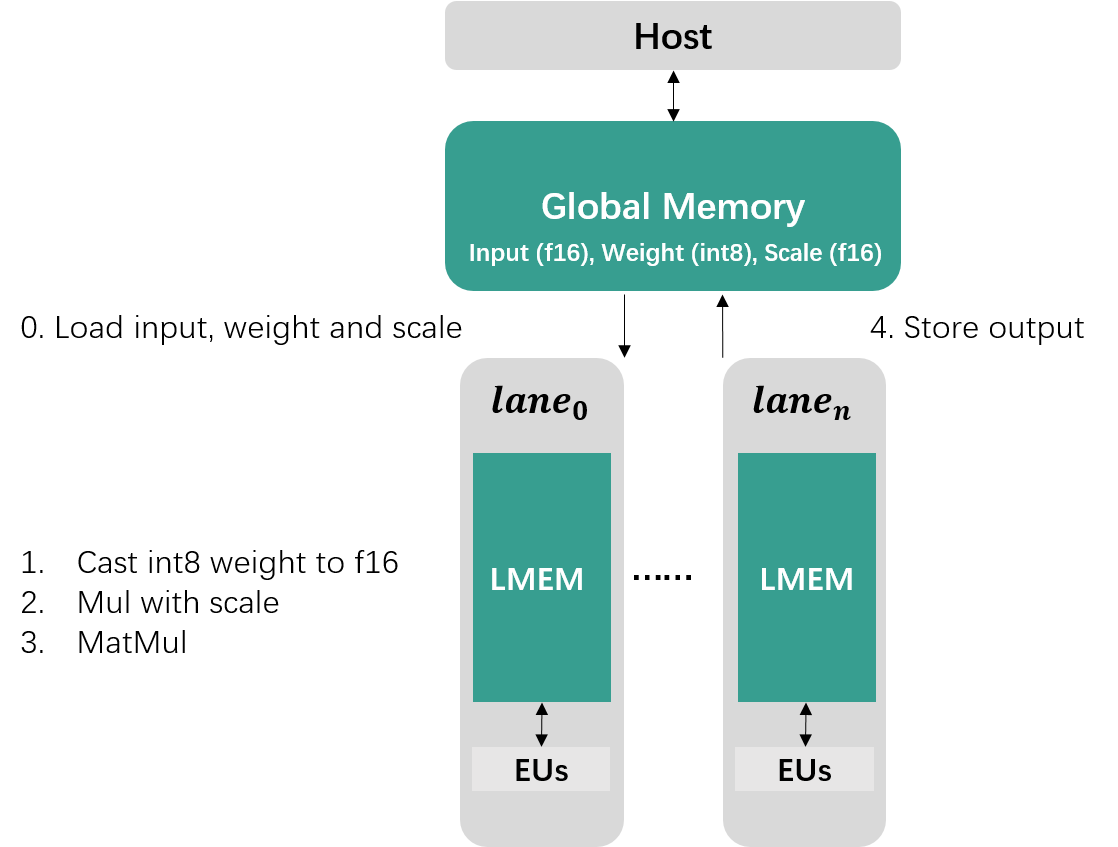

The previous section showed how quantization reduces storage. The speedup, however, comes mainly from the implementation of the W8A16MatMul backend operator. If you are not familiar with the TPU architecture, you can refer to TPU Principles Introduction (Part 1) and TPU Principles Introduction (Part 2). On the current TPU architecture, the W8A16 computation consists of five main steps, as shown in the figure:

Figure: W8A16MatMul Computation on TPU

Because Local Memory is limited in size, large tensors are typically split into tiles, and loading, computation, and storage are performed tile by tile. To improve efficiency, we exploit the parallelism between GDMA and BDC instructions so that data transfer and computation happen at the same time.

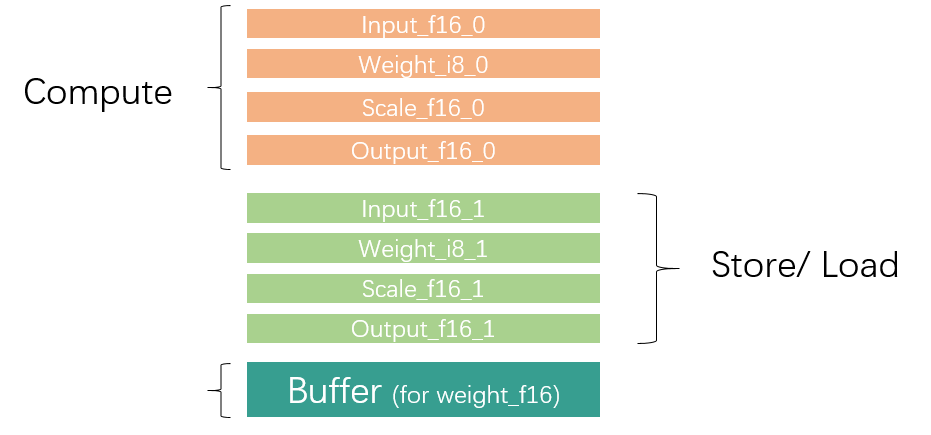

Therefore, within each loop iteration, Local Memory is generally divided into two regions: one is used for computation, while the other stores the results computed in the previous iteration and loads the data required for the next one, as shown in the figure.

Figure: Local Memory Partition
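The two-region scheme is a form of double buffering (ping-pong buffering). The Python sketch below only produces the schedule as strings, assuming one tile per loop iteration and exactly two Local Memory regions; `ping_pong_schedule` is a hypothetical helper, not a TPU-MLIR or backend API, and a real kernel issues GDMA and BDC instructions instead.

```python
from typing import List, Tuple


def ping_pong_schedule(num_tiles: int) -> List[Tuple[str, str]]:
    """Return (GDMA work, BDC work) for each step of a two-region pipeline.

    Tile i lives in region i % 2. While BDC computes tile i, GDMA uses the
    other region to store the result of tile i - 1 and load tile i + 1.
    """
    steps = [("load tile 0 -> region 0", "idle")]  # prologue: nothing to compute yet
    for i in range(num_tiles):
        gdma = []
        if i >= 1:
            gdma.append(f"store tile {i - 1} <- region {(i - 1) % 2}")
        if i + 1 < num_tiles:
            gdma.append(f"load tile {i + 1} -> region {(i + 1) % 2}")
        steps.append(("; ".join(gdma) or "idle", f"compute tile {i} in region {i % 2}"))
    # Epilogue: write back the last result once computation has finished.
    steps.append((f"store tile {num_tiles - 1} <- region {(num_tiles - 1) % 2}", "idle"))
    return steps


if __name__ == "__main__":
    for step, (gdma, bdc) in enumerate(ping_pong_schedule(3)):
        print(f"step {step}: GDMA[{gdma}] | BDC[{bdc}]")
```

With three tiles, BDC is busy in every step except the prologue and epilogue; the longer the tile loop, the smaller the share of those two unoverlapped steps.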

The matrix multiplication can be written as:

$$
Y_{row \times col} = L_{row \times inner} \times R_{inner \times col}
$$

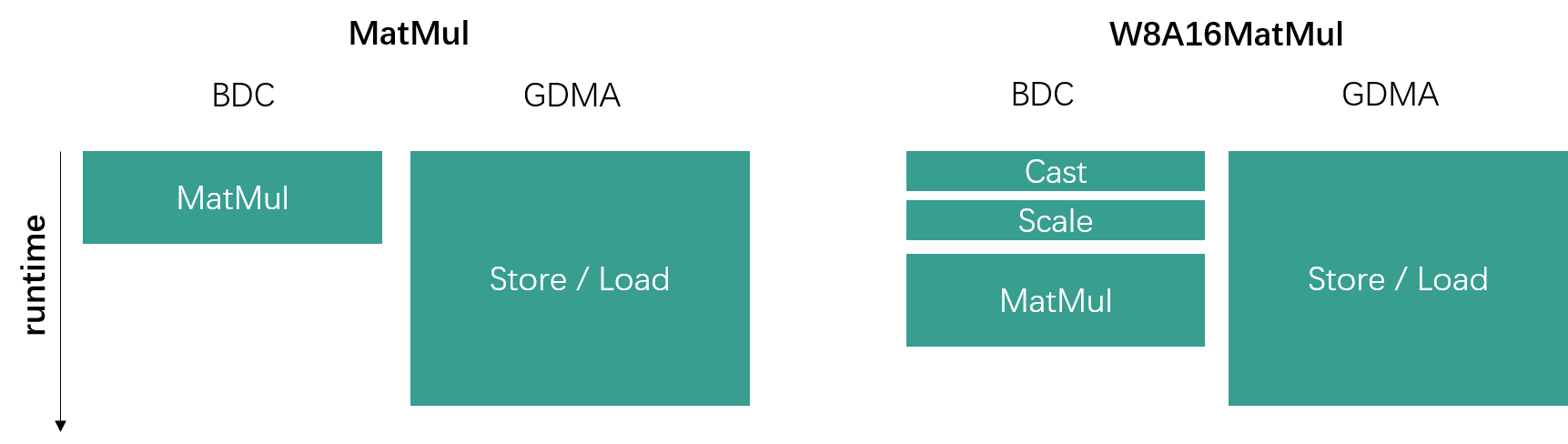

When the L matrix is small, the performance bottleneck is loading the R matrix: data transfer takes much longer than computation. W8A16 halves the total amount of R data to be transferred. The additional Cast and Scale operations it introduces can be hidden behind the data transfer, so they do not increase the overall running time, as shown in the figure.

Figure: GDMA and BDC Parallelism

In summary, the smaller $L_{row}$ and the larger $R_{col}$, the greater the speedup from W8A16.
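A back-of-the-envelope cost model illustrates the $L_{row}$ part of this argument. The sketch assumes perfect GDMA/BDC overlap, so each MatMul takes max(transfer time, compute time); it counts only the R-matrix transfer, counts a multiply-add as two operations, charges Cast and Scale as two extra operations per weight element, and uses made-up bandwidth and throughput figures that are not BM1684X specifications. `matmul_time_s` and `w8a16_speedup` are hypothetical helpers. Because only R traffic is modelled, the estimate does not capture the effect of $R_{col}$.

```python
def matmul_time_s(l_row: int, inner: int, col: int, weight_bytes: int,
                  bandwidth: float, throughput: float,
                  extra_ops_per_weight: int = 0) -> float:
    """Estimated time (s) of Y = L x R under perfect transfer/compute overlap.

    bandwidth is in bytes/s and throughput in ops/s; only R is transferred.
    """
    transfer = inner * col * weight_bytes / bandwidth
    compute = (2 * l_row * inner * col + extra_ops_per_weight * inner * col) / throughput
    return max(transfer, compute)


def w8a16_speedup(l_row: int, inner: int = 4096, col: int = 27392,
                  bandwidth: float = 64e9, throughput: float = 16e12) -> float:
    """F16 time divided by W8A16 time for one MatMul (hypothetical hardware)."""
    f16 = matmul_time_s(l_row, inner, col, 2, bandwidth, throughput)
    # W8A16: half the bytes for R, plus Cast (INT8 -> F16) and Scale per weight element.
    w8a16 = matmul_time_s(l_row, inner, col, 1, bandwidth, throughput,
                          extra_ops_per_weight=2)
    return f16 / w8a16


if __name__ == "__main__":
    for l_row in (1, 128, 512, 2048):
        print(f"L_row={l_row:5d}: speedup {w8a16_speedup(l_row):.2f}x")
```

With these constants the estimate is 2.00x at $L_{row} = 1$, still close to 2x at 128, and about 1x by 512, where the MatMul becomes compute-bound and halving the R traffic no longer shortens the critical path.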

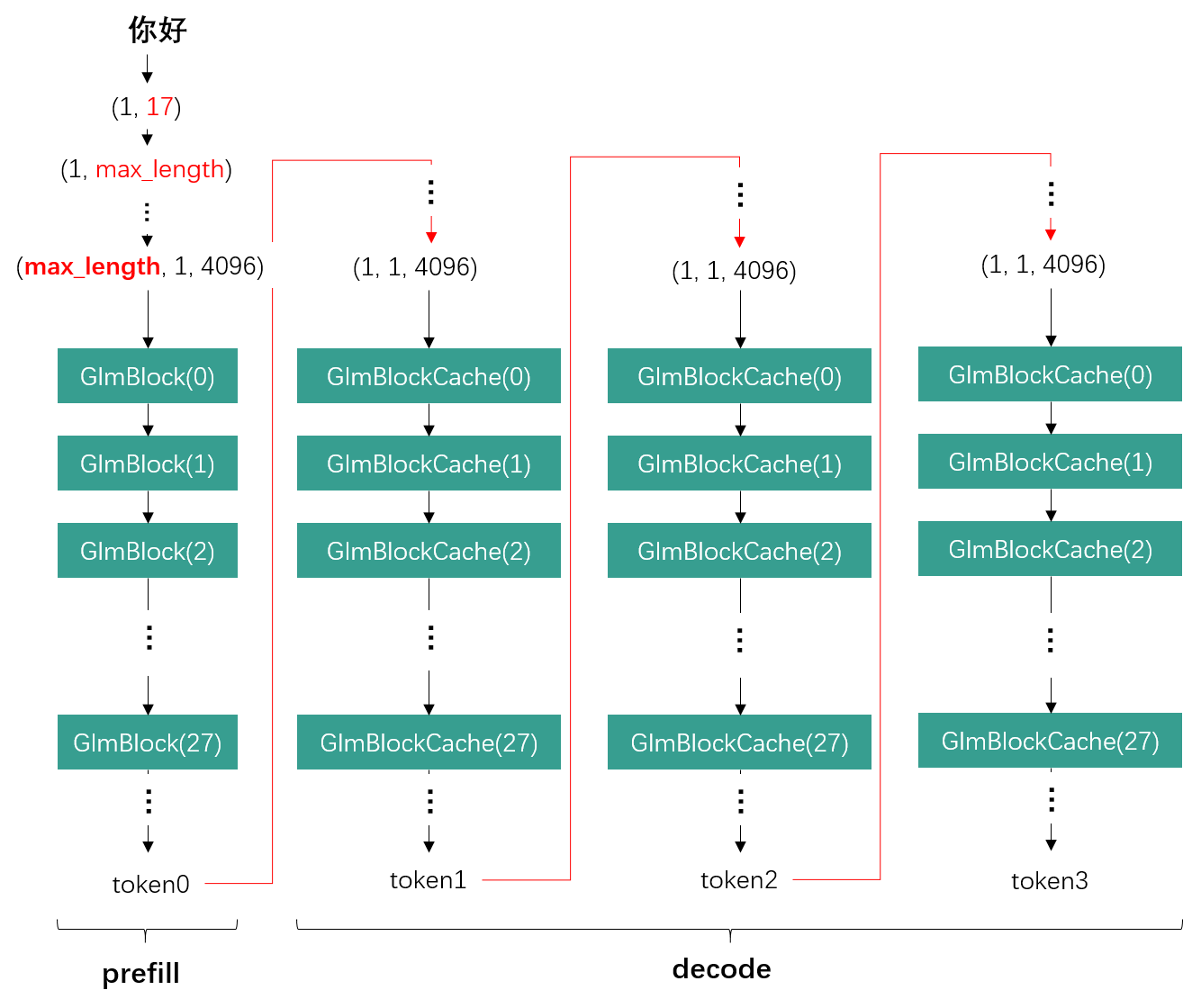

Taking ChatGLM2 as an example of an LLM, a complete inference consists of one prefill round followed by multiple decode rounds. In the prefill phase, our current static-graph approach pads the input to the maximum text length supported by the model (e.g., 512, 1024, or 2048). In the decode phase, only the single token generated in the previous round is used as input, so the input size stays fixed.

Figure: ChatGLM2 Inference

Therefore, as max_length grows, the GLMBlocks receive larger inputs in the prefill phase and the $L_{row}$ of each Linear layer grows with it, which reduces the speedup W8A16 can deliver there. In the decode phase, however, $L_{row}$ stays at 1, so W8A16 brings a significant speedup.

Figure: MatMuls in the ChatGLM2 Prefill and Decode Phases
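The difference between the two phases comes down to the row count of the left MatMul input. The sketch below assumes a hypothetical padding token id of 0 and ignores attention masks and the KV cache; `pad_to_max_length` and `linear_input_shape` are illustrative helpers, not ChatGLM2 or TPU-MLIR functions. The hidden size of 4096 comes from the MatMul example in Section 3.

```python
from typing import List, Tuple

HIDDEN_SIZE = 4096  # width of L in the ChatGLM2 MatMul example
PAD_ID = 0          # hypothetical padding token id


def pad_to_max_length(token_ids: List[int], max_length: int) -> List[int]:
    """Static-graph prefill: the prompt is padded to a fixed max_length."""
    if len(token_ids) > max_length:
        raise ValueError("prompt longer than the compiled max_length")
    return token_ids + [PAD_ID] * (max_length - len(token_ids))


def linear_input_shape(phase: str, max_length: int) -> Tuple[int, int]:
    """Shape (L_row, inner) of the left input to a GLMBlock Linear layer."""
    if phase == "prefill":
        return (max_length, HIDDEN_SIZE)  # L_row = max_length, whatever the prompt length
    if phase == "decode":
        return (1, HIDDEN_SIZE)  # only the token generated in the previous round
    raise ValueError(f"unknown phase: {phase}")


if __name__ == "__main__":
    prompt = [101, 2023, 2003]  # hypothetical token ids
    print(len(pad_to_max_length(prompt, 512)))  # 512
    for max_length in (512, 1024, 2048):
        print(max_length,
              linear_input_shape("prefill", max_length),
              linear_input_shape("decode", max_length))
```

Because padding fixes $L_{row}$ at max_length for every prompt, the prefill MatMuls sit at the compute-heavy end of the range, while every decode MatMul has $L_{row} = 1$.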

5. Showcase

After applying W8A16 quantization to ChatGLM2-6B, the overall performance is as follows:

- Speed: answer generation is 70% faster.

- Accuracy: answers differ slightly from those of the F16 model, but their correctness is preserved.

- Model size: reduced from 12GB to 6.4GB.

Figure: Result Comparison